#KFCBBANSLESBIANFILM. ARE KENYANS ON TWITTER HOMOPHOBIC?

The Cannes Film Festival is an invitation-only film festival that takes place annually in Cannes, France. Per Wikipedia, “… is a popular venue for film producers to launch their new films…” Basically, getting your movie screened at Cannes is a huuge deal.

Enter Rafiki. Rafiki is the first ever Kenyan film to be screened at Cannes. You would think this fact would unite us as a country to show support by showcasing it everywhere. Well, you are dead wrong!!

Rafiki was banned in Kenya by the Kenya Film Classification Board (KFCB). Why? The movie, directed by Wanuri Kahiu, is a love story between two women from opposing political families. Trailer here. Yap. It has a homosexual theme.

This move by the KFCB led to a predictable altercation on Twitter via #KFCBbansLesbianFilm.

This ordeal got me asking, “Are Kenyans on Twitter homophobic? And how do I go about getting the answer?” Well, of course by analysing the thousands of tweets about the topic.

Join me, and let’s find out how insightful this data can get…

Note: All the technical details (the code, techniques) are explained in depth here

Who are these ‘Kenyans on Twitter’ anyway?

After drinking from the bountiful well of the Twitter API, about 1200 tweets were collected under #KFCBbansLesbianFilm. Let's find out who was taking part, using a little Tableau magic.

Tweeps by activity

The most active, @ItsMutuma had 58 tweets

Tweeps by influence (Number of followers)

The most influential user is @VictorMochere with a whopping 877,550 followers!

What defines a tweet?

According to Twitter, a tweet is defined by about 120 features. For our case, however, I believe that the tweet id, the date of creation, the number of times the tweet is quoted, the number of retweets the tweet has, the number of favourites, the tweep, whether or not he's verified, and his number of followers are enough features to accurately define a tweet.

What makes a tweet homophobic or not homophobic?

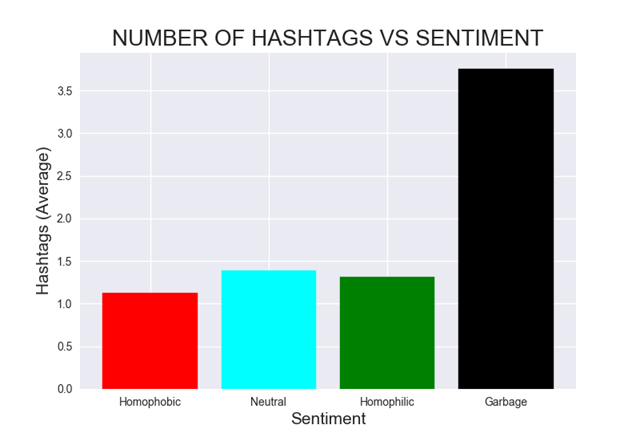

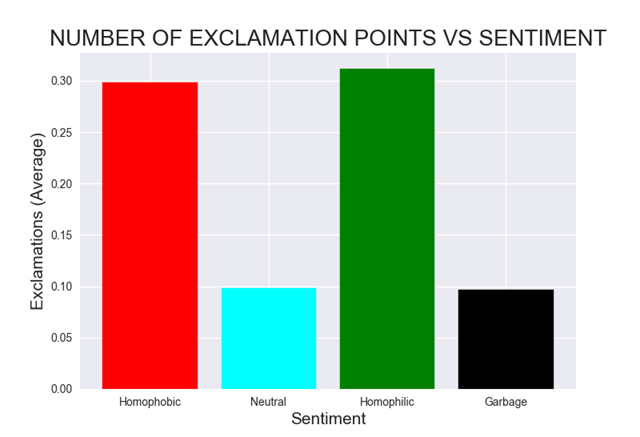

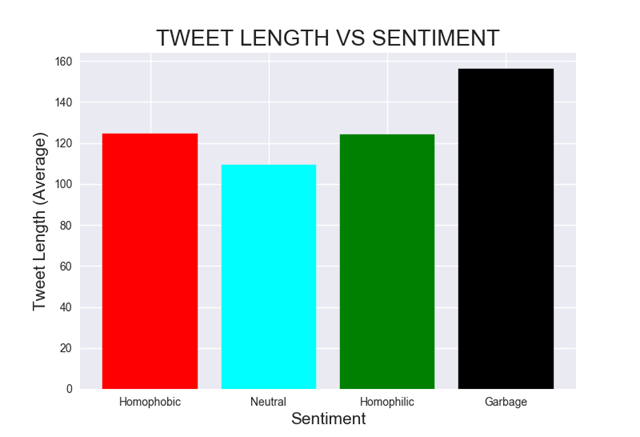

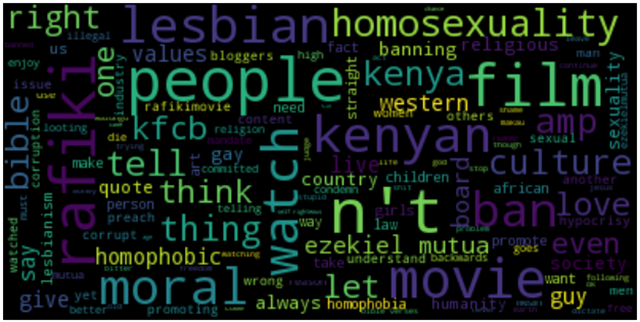

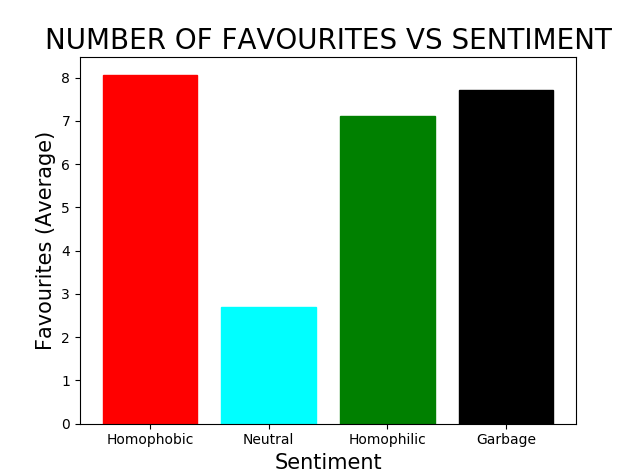

For the sake of visualisation and modelling, a batch of tweets was manually labelled with one of four sentiments: neutral, homophobic, homophilic or garbage (tweets irrelevant to the hashtag). I then did some plots.

- Average number of hashtags vs sentiment

- Average number of exclamation points vs sentiment

- Average tweet length vs sentiment

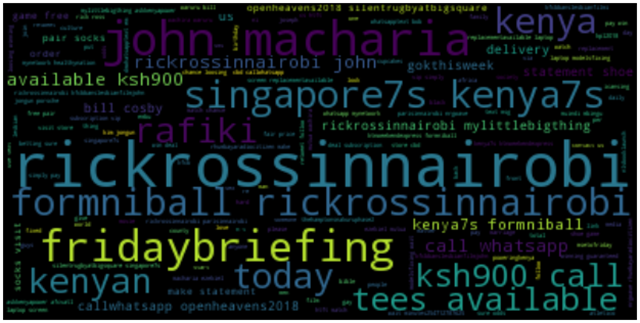

- Homophobic sentiment word frequency

- Homophilic sentiment word frequency

- Neutral sentiment word frequency

- Garbage sentiment word frequency

Looks like Rick Ross coming to Nairobi was a MASSIVE issue!!

Are #KOT homophobic?

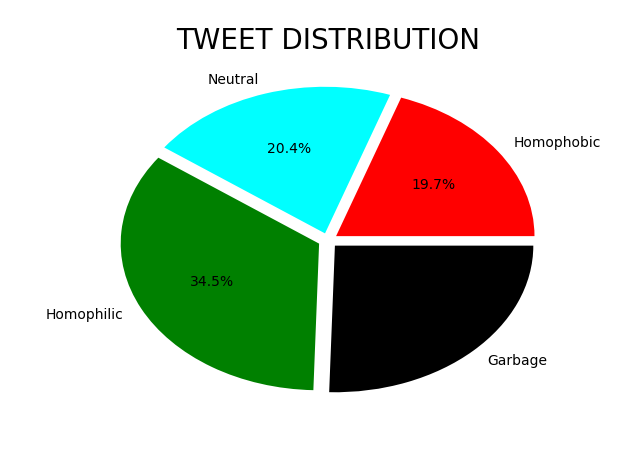

- Based on the batch of pre-labeled tweets, the rest of the bunch were labeled (using a Random Forest Classifier) and the following were the results:

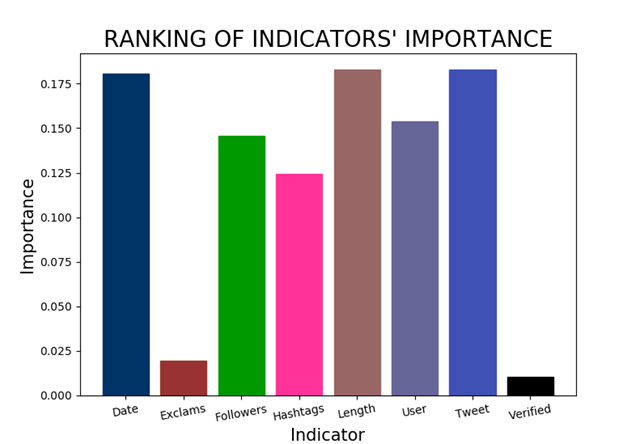

Importance of feature in determining sentiment

Distribution of tweets

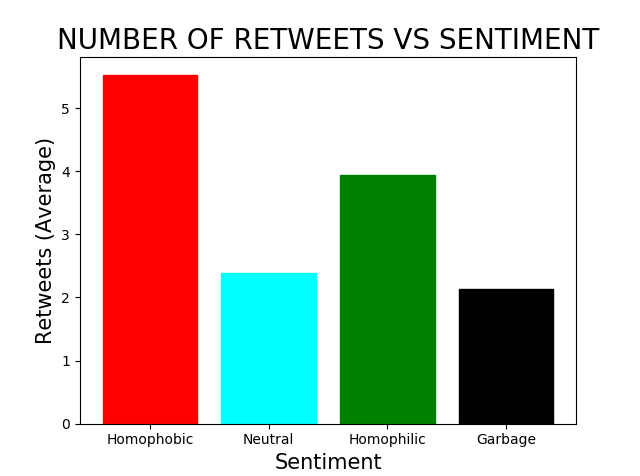

Average number of retweets vs Sentiment

Average number of favourites vs Sentiment

So, are #KOT homophobic? Well, you decide.

Note: The following section provides an in-depth explanation of the process, tools and techniques applied to answer today’s question. Feel free to skip to the comment section, here

THE TUTORIAL

In this section, I provide a full tutorial on how to recreate exactly what I did.

Prerequisites:

- Python >2.7

- A Twitter account

- The code repository off Github, here

- Fundamentals of machine learning (start here)

- Machine learning in Python (start here)

1. DATA COLLECTION AND PARSING

A. SETUP

The tweets were collected by consuming the Twitter API. To connect:

REGISTER FOR THE API KEYS

- Under 'Keys and Access Tokens' of your app's dashboard, click 'Create my Access Token' button

- You should now have four authentication strings: Consumer Key, Consumer Secret, Access Token and Access Token Secret. Save them. Your life depends on it!

INSTALL A PYTHON WRAPPER FOR TWITTER API

On the terminal:

pip install twython

B. USAGE

After your environment is set up, it's time to get down!

COLLECT TWEET OBJECTS

- The Twitter API provides a myriad of functionality, from search to posting on your timeline. For our purposes, we focus on search.

- The Standard Search API serves tweets (up to a week old) in the form of a Tweet JSON

CONNECT TO THE API

from twython import Twython

consumer_key = 'as registered above'

consumer_secret = 'as registered above'

access_token = 'as registered above'

access_token_secret = 'as registered above'

api = Twython(consumer_key, consumer_secret, access_token, access_token_secret)

SEARCH FOR TWEETS

* What you need to know:

a) Due to the dynamism of the data, Twitter serves tweets in pages i.e. a set of tweet objects… maximum of 100 per page (defined by the count parameter)

b) The API search function takes the following (the ones relevant to us) arguments:

- q : The search term as a string

- result_type: Can be one of:

  - popular: Most popular tweets for the search term

  - recent: Most recent tweets

  - mixed: A mix of popular and recent

- count: The number of tweets to return per page

- max_id: A tweet Id. The results returned are the tweets with an ID less than this i.e. older than this tweet

- tweet_mode: Set to ‘extended’ to get the full 140+ chars of text.

c) To retrieve more than 100 tweets i.e. several pages, the API utilises cursoring. In a nutshell:

After retrieving a page of tweets, an object 'next_cursor' / 'prev_cursor' which states the point where the next/previous search should start, is availed in the Tweet JSON

* For our case, the logic for extracting more than 100 tweets is as follows:

i. Retrieve the first 100 tweets with default value of max_id i.e. no value passed to the max_id parameter

ii. From the returned tweets, compute the smallest tweet id

iii. Repeat the search but now pass the value computed in step (ii) above to the parameter max_id. This tells the API, 'return tweets older than this ID'

iv. Repeat recursively till there are no more tweets returned.

- A recursive function to accomplish this can be:

from twython import TwythonError

def collectTweets(searchTerm, api, maxId=None):
    ids = []  # IDs of the tweets on this page
    try:
        results = api.search(q=searchTerm, lang='en', result_type='recent',
                             count=100, tweet_mode='extended', max_id=maxId)
        for tweet in results['statuses']:
            ids.append(tweet['id'])
        if len(ids) == 0:
            print("Finished")
            return
        else:
            lowest = min(ids) - 1  # Get smallest ID-1, pass as max ID
            collectTweets(searchTerm, api, lowest)
    except TwythonError as e:
        print(e)
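The same max_id walk can also be written as a loop that returns the collected tweet objects. What follows is only a sketch: `collect_all` and `FakeApi` are hypothetical names, and `FakeApi` is a stand-in that lets the example run without network access. A real Twython client could be passed instead, assuming its `search` method returns a dictionary with a 'statuses' list as described above.

```python
def collect_all(search_term, api, page_size=100):
    """Walk backwards through search results using max_id."""
    tweets = []
    max_id = None  # no max_id on the first request
    while True:
        params = dict(q=search_term, count=page_size,
                      result_type='recent', tweet_mode='extended')
        if max_id is not None:
            params['max_id'] = max_id
        page = api.search(**params)['statuses']
        if not page:  # an empty page means nothing older is left
            break
        tweets.extend(page)
        # Ask for tweets strictly older than the oldest one seen so far
        max_id = min(tweet['id'] for tweet in page) - 1
    return tweets


class FakeApi(object):
    """Hypothetical stand-in for the Twitter client, for offline runs."""

    def __init__(self, ids):
        self.ids = sorted(ids, reverse=True)  # newest (largest ID) first

    def search(self, q, count=100, max_id=None, **kwargs):
        matching = [i for i in self.ids if max_id is None or i <= max_id]
        return {'statuses': [{'id': i} for i in matching[:count]]}


collected = collect_all('#KFCBbansLesbianFilm', FakeApi(range(1, 251)))
```

Here the fake client holds 250 tweets, so the loop makes three full or partial page requests plus one empty one before stopping.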

DUMP TWEET OBJECTS

- To save every tweet:

i. Create an empty dictionary, and create an empty list in it

tweetObjects = {}

tweetObjects['TweetData']=[]

ii. To the empty list, add every returned JSON

tweetObjects['TweetData'].append(tweet)

iii. Repeat till no tweets are returned, by passing the populated list to the search function recursively

iv. When no more tweets are available, dump the list of Tweet Objects to a JSON file. The JSON library is needed

import json

with open('filename.json', 'w') as dump:
    json.dump(tweetObjects, dump)

v. The final output will be a .json file with a list of tweet objects, where each object can be accessed as:

with open('filename.json') as source:
    objects = json.load(source)

for tweet in objects['TweetData']:
    pass  #Process each Tweet object

- You now have a huge number of tweet objects as a JSON file (data/project1data.json), where each has over 120 properties. We require only 10 features, in a human-readable format. The best thing to do is a JSON-to-CSV conversion with feature extraction.

- PARSE TWEET OBJECTS

Refer to parser.py

- After inspecting the JSON returned, the text and the user's name can be retrieved and written to a csv file as follows (JSON and CSV libs are required):

import json
import csv

#data: dictionary of tweet objects read from the json file created above
with open('filename.json') as source:
    data = json.load(source)

with open('filename.csv', 'a') as dump:
    writer = csv.writer(dump)
    for tweet in data['TweetData']:
        text = tweet.get('full_text').encode('ascii', 'ignore').decode('ascii')
        username = '@' + tweet.get('user', {}).get('screen_name')
        writer.writerow([text, username])

- Use this approach to extract all the features desired.

- The output of parsing is a CSV file with 10 features (data/project1.csv)
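As an illustration of extracting several features at once, here is a hedged sketch rather than the code in parser.py. The function name and the sample tweet are made up; the field names (`retweet_count`, `favorite_count`, `followers_count` and so on) are assumed to match the Tweet JSON returned by the Standard Search API, and the 5/4 encoding for verification is the one described under LABELLING.

```python
def extract_features(tweet):
    """Flatten one tweet object into a list of CSV-ready values."""
    user = tweet.get('user', {})
    text = tweet.get('full_text', '')
    return [
        tweet.get('id'),
        tweet.get('created_at'),
        tweet.get('retweet_count', 0),
        tweet.get('favorite_count', 0),
        '@' + user.get('screen_name', ''),
        5 if user.get('verified') else 4,  # verified = 5, not verified = 4
        user.get('followers_count', 0),
        text.encode('ascii', 'ignore').decode('ascii'),  # drop non-ASCII
    ]


# Made-up tweet object, for illustration only
sample = {
    'id': 1,
    'created_at': 'Thu May 03 15:13:44 +0000 2018',
    'retweet_count': 3,
    'favorite_count': 7,
    'full_text': u'Rafiki\u2764 #KFCBbansLesbianFilm',
    'user': {'screen_name': 'example_user', 'verified': False,
             'followers_count': 120},
}
row = extract_features(sample)
```

Each row produced this way can be handed straight to `writer.writerow`.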

LABELLING

- To train our classifier, we need to label some of our data, manually

- For my purposes, I added a ‘sentiment’ column to data/project1data.csv and assigned a section of the tweets to one of the following categories:

- phobic (0) - Tweets that were explicitly in support of the ban or straight up homophobic e.g. “#KFCBbansLesbianFilm I ask the supporters of this...should we also authorize porn films in our cinemas.?...the ban on this movies should stay...if you must practice homosexuality do it in the confines of your bedroom....and keep quiet!”

- neutral (1) – Tweets that were informational but didn’t show what side they were on e.g. “Banning or condemning any creation, only makes the consumers more interested. Public banning of films only results to high demand of the same.#KFCBbansLesbianFilm”

- philic (2) - Tweets that were explicitly against the ban or straight up shunned homophobia e.g. “Because the sun stops shining when girls kiss girls? ”

- garbage (3) – Tweets that had nothing to do with the hashtag (spammers ‘n shit) e.g. “@mediarenegades,242,4,10 days to #AmbaHama #RenegadeComedy and #RickRossInNairobi as #KFCBbansLesbianFilm https://t.co/qYRX56JXHW”

- Whether or not the tweep was verified, was also considered:

- verified – 5

- not verified - 4

- The labeled and unlabeled data were then split into three files (data/train.csv, data/test.csv, data/unlabeled.csv)

2. PREPROCESSING

Refer to preprocessor.py

- Machine learning models only work on numbers. For our textual data, therefore, we need to somehow convert everything (consistently) into numbers

CREATE CONVERSION TABLE

- For our usernames, we need to convert every username to a unique ID. This is achieved by assigning an ID to every unique user in data/project1data.csv. Here’s how:

i. Read the CSV file into a Pandas dataframe and extract all usernames

import pandas as pd

df= pd.read_csv('project1data.csv')

usernames = df['username']

ii. Create an empty dictionary and two empty sets (for usernames and IDs). This will be our table

conversionTable = {}

users = set()

ids = set()

iii. Since a set can only contain unique values, convert the list of usernames into a set, then add each username to our new empty set ‘users’

iv. While adding unique users, add an ID to the empty set ‘ids’

i = 1
for username in set(usernames):
    users.add(username)
    ids.add(i)
    i = i+1

v. Convert the sets created into lists, then add them to the dictionary (our table)

conversionTable['username'] = list(users)
conversionTable['id'] = list(ids)

vi. All our unique users are accessible via “conversionTable[‘username’]” and their corresponding unique IDs via “conversionTable[‘id’]”

PROCESS USERNAMES

- Using the newly created conversion table, convert each username in the train, test and unlabeled files into a unique ID. Here’s how:

i. In the conversion table, check where a username exists

id = table['username'].index(unprocessedUserName) #This returns the index

ii. With the returned index, check the value of the user ID at that index in table[‘id’]. Append this to a list of processed users

userId = table['id'][id]
processedTweeps.append(userId)
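A plain dictionary gives the same username-to-ID lookup in a single step, and avoids scanning a list with `.index()` for every tweet. This is an alternative sketch, not the code in preprocessor.py; the function name and the handles are made up.

```python
def build_conversion_table(usernames):
    """Map each distinct username to an integer ID, starting at 1."""
    table = {}
    for name in usernames:
        if name not in table:
            table[name] = len(table) + 1  # next unused ID
    return table


usernames = ['@alice', '@bob', '@alice', '@carol']  # made-up handles
table = build_conversion_table(usernames)
processedTweeps = [table[name] for name in usernames]
```

As long as the same table is reused for the train, test and unlabeled files, a given user always maps to the same ID.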

PROCESS DATES

- The date of a tweet needs to be converted into a machine readable timestamp

- Twitter returns dates in the following manner: Thu May 03 15:13:44 2018. To convert to a timestamp, Python takes dates in the following manner: 2018-05-03 15:13:44

- To convert between the two date formats, a regex (via Python’s re module) was used.

- After conversion, the date is converted to a timestamp and appended to a list as follows:

import time
from datetime import datetime

dt = '2018-05-03 15:13:44'  #The converted date (example value from above)
stamp = time.mktime(datetime.strptime(dt, "%Y-%m-%d %H:%M:%S").timetuple())
processedDates.append(stamp)
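The regex step can also be skipped, since `datetime.strptime` can parse the Twitter-style date directly when given a matching format string. A small sketch, assuming the date looks exactly like the example above (the raw `created_at` field may also carry a UTC offset such as `+0000`, which this format string does not handle). The function name is made up, and `calendar.timegm` is swapped in for `time.mktime` so that the result does not depend on the machine's local timezone.

```python
import calendar
from datetime import datetime


def to_timestamp(twitter_date):
    """Parse a date such as 'Thu May 03 15:13:44 2018' into a Unix timestamp."""
    parsed = datetime.strptime(twitter_date, '%a %b %d %H:%M:%S %Y')
    # timegm treats the time tuple as UTC; time.mktime would use local time
    return calendar.timegm(parsed.timetuple())


stamp = to_timestamp('Thu May 03 15:13:44 2018')
```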

PROCESS TWEETS

- To be fed into our model, each tweet needs to be converted into a usable vector (or matrix).

- Before vectorisation is done, irrelevant noise needs to be removed from the text.

- Processing of tweets largely utilises Python’s re , NLTK and SKLearn.

LOWERCASE ALL THE THINGS

tweet = tweet.lower() #On Python 2, decode byte strings first: tweet.decode('utf-8').lower()

REMOVE NEWLINES

import re

tweet = re.sub(r'\n', "", tweet)

REMOVE SOME PUNCTUATIONS

- Most punctuations do not add meaning. I decided to remove all apart from '#' and '!'

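#Run this after the URL and mention steps below, which rely on ':', '/', '.' and '@'
puncts = r'"$%&\()*+,-./:;<=>?@[\]^_`{|}~' #Punctuations

tweet = ''.join(c for c in tweet if c not in puncts) #Join the kept characters back into a string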

REMOVE URLS

- URLS of the form 'https://t.co…' have no value. Remove those

tweet = re.sub(r'https:(.{17})', "", tweet)

REMOVE MENTIONS

- Mentions of the form '@…' have no value. Remove those

tweet = re.sub(r'(\A|\s)@(\w+)',"", tweet)

REMOVE SOME STOPWORDS

- Some commonly used stopwords that add no meaning to a sentence were removed. Here's how:

i. With the processed tweet, split it into words (tokenise), then remove our defined stop words

from nltk.tokenize import word_tokenize

stopWords = []  # A list of commonly used words you would like to remove
words = word_tokenize(tweet)
tweet = ' '.join([d for d in words if d not in stopWords])
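Put together, the cleaning steps could look roughly like the sketch below. It is not the code in preprocessor.py: the function name is made up, a plain whitespace split stands in for NLTK's `word_tokenize`, newlines are replaced with a space rather than an empty string so that words on adjacent lines are not glued together, and the URL and mention patterns are applied before punctuation is stripped.

```python
import re

PUNCTS = r'"$%&\()*+,-./:;<=>?@[\]^_`{|}~'  # '#' and '!' are kept on purpose


def clean_tweet(tweet, stop_words=()):
    """Apply the cleaning steps above to a single tweet string."""
    tweet = tweet.lower()
    tweet = re.sub(r'\n', ' ', tweet)
    # URLs and mentions go first: once ':', '/', '.' and '@' are stripped,
    # their patterns would no longer match
    tweet = re.sub(r'https:(.{17})', '', tweet)
    tweet = re.sub(r'(\A|\s)@(\w+)', '', tweet)
    tweet = ''.join(c for c in tweet if c not in PUNCTS)
    words = [w for w in tweet.split() if w not in stop_words]
    return ' '.join(words)


cleaned = clean_tweet(
    'The ban should STAY!\n@someone https://t.co/qYRX56JXHW #KFCBbansLesbianFilm',
    stop_words={'the', 'should'},
)
```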

3. EXPLORATORY DATA ANALYSIS

Refer to eda.py

- The simple process of eyeballing data can be very useful. For instance, just by looking, most tweets with the garbage sentiment have a higher number of hashtags. Eyeballing, however, is not always enough. Visualisation is needed i.e. bar plots, scatter plots, pie charts etc.

* Some EDA (i.e. user activity and influence) was done on Tableau. See general guide, here

- For our purposes, only the processed training data was used

- BAR GRAPHS

- The logic for plotting comparative bar graphs is as follows:

i) Identify the feature you want to compare e.g. average number of hashtags

ii) For every sentiment, extract the matching feature value i.e. calculate the average number of hashtags for all tweets of each sentiment

iii) With the average value for each sentiment, plot a bar graph using matplotlib:

import numpy as np
import matplotlib.pyplot as plt

#y is an array of the four averages to plot; labels holds the four sentiment names
x = np.arange(len(y))

graph = plt.bar(x, y)

graph[0].set_color('#ff0000')

graph[1].set_color('#00FFFF')

graph[2].set_color('#008000')

graph[3].set_color('#000000')

plt.xlabel('Label for x axis', fontsize=15)

plt.ylabel('Label for y axis', fontsize=15)

plt.xticks(x, labels, fontsize=10, rotation=0)

plt.title('The title', fontsize=20)

plt.show()
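Step (ii), computing the average of a feature for each sentiment, can be done with a couple of dictionaries. This is a minimal sketch with made-up rows and a made-up function name; in practice the rows would come from the processed training CSV.

```python
from collections import defaultdict


def average_by_sentiment(rows, feature):
    """Return {sentiment: mean of `feature`} for a list of row dictionaries."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in rows:
        totals[row['sentiment']] += row[feature]
        counts[row['sentiment']] += 1
    return {s: totals[s] / counts[s] for s in totals}


# Made-up rows; sentiment codes follow the labelling scheme (0 to 3)
rows = [
    {'sentiment': 0, 'hashtags': 1},
    {'sentiment': 0, 'hashtags': 3},
    {'sentiment': 3, 'hashtags': 6},
]
averages = average_by_sentiment(rows, 'hashtags')
labels = sorted(averages)             # sentiment codes, in order
y = [averages[s] for s in labels]     # heights of the bars
```

The resulting `y` and `labels` are what the bar-graph snippet above expects.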

- WORD CLOUDS

- Wordclouds are a fantastic way to visualise word frequency.

pip install wordcloud

The logic is:

i) Extract all the tweets associated with a sentiment

ii) Extract the words from this corpus

iii) Remove the obvious stopword 'kfcbbanslesbianfilm'

iv) Create a string for each sentiment, with the words

v) Draw a wordcloud

from wordcloud import WordCloud

cloud = WordCloud(max_font_size=40).generate(corpus) #corpus is the string containing all the words associated with a sentiment

plt.figure()

plt.imshow(cloud, interpolation="bilinear")

plt.axis("off")

plt.show()
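Steps (i) to (iv) amount to joining the words of every tweet with a given sentiment into one string, minus the hashtag itself. A rough sketch with made-up tweets and a made-up function name; `Counter` is used only to peek at the word frequencies that the cloud would visualise.

```python
from collections import Counter


def build_corpus(tweets, ignore=('#kfcbbanslesbianfilm', 'kfcbbanslesbianfilm')):
    """Join the words of all tweets into one string, skipping ignored words."""
    words = []
    for tweet in tweets:
        words.extend(w for w in tweet.split() if w not in ignore)
    return ' '.join(words)


# Made-up, already-cleaned tweets for a single sentiment
tweets = ['rick ross nairobi #kfcbbanslesbianfilm', 'rick ross concert']
corpus = build_corpus(tweets)
top_words = Counter(corpus.split()).most_common(2)
```

The `corpus` string is what would be passed to `WordCloud(...).generate(corpus)`.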

4. PREPROCESSING AND MODELLING

Refer to model.py

VECTORISE THE TWEETS

- The processed training, test and unlabeled data CSV files (output of preprocessor.py) were read into the model.

- The processed text needs to be transformed into machine-consumable vectors. The most effective and fastest vectorisation method was found to be TF-IDF, using the SKLearn implementation.

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizertr = TfidfVectorizer(ngram_range=(1, 1), analyzer="word", binary=False, sublinear_tf=False, max_features=300)
vectorisedTweets = vectorizertr.fit_transform(corpus).todense() #Corpus is the array of processed tweets
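For reference, the weighting that TfidfVectorizer applies with its default settings (smooth_idf=True and norm='l2', plus sublinear_tf=False as in the call above) can be written as follows. The symbols are introduced here for illustration: n is the number of tweets, df(t) is the number of tweets containing term t, and tf(t, d) is the raw count of t in tweet d.

```latex
\mathrm{idf}(t) = \ln\frac{1 + n}{1 + \mathrm{df}(t)} + 1, \qquad
w_{t,d} = \mathrm{tf}(t,d)\cdot\mathrm{idf}(t), \qquad
\hat{w}_{t,d} = \frac{w_{t,d}}{\sqrt{\sum_{t'} w_{t',d}^{2}}}
```

Terms that appear in almost every tweet therefore get a weight close to their raw count, while rarer terms are boosted before each tweet's vector is scaled to unit length.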

- Due to a peculiar problem encountered when the vectorisation was done for the whole corpus (the vectors were generated with '…' in them. huh?) vectorisation was done in batches of 10

- This created a new problem. The vectors created were of different lengths hence logic to make sure all are equal (len = 300) was created

- Finally, saving the vectors to a Dataframe turned out to be impossible, so the sum of each vector was computed and this is what was used as the feature

- MODELLING

- Our data was properly collected and preprocessed, so no need for cleaning and such.

- FEATURES AND LABEL

- The features to be used in our model were chosen to be: username, verification, number of followers, date, length of tweet, number of hashtags, number of exclamation points and the vectorised tweet [its sum]

- Apart from the vectorised tweet, all other features were read 'as-is' from the processed files

- These were written into a features dataframe

import pandas as pd

newDf = pd.DataFrame({'users':usernames, 'ver':verified, 'foll':noOfFollowers, 'date':tweetedDate, 'ln':tweetLength, 'hash':noOfHashtags, 'exc':noOfExclams, 'vec':twits})
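The three count-based features (tweet length, number of hashtags, number of exclamation points) are simple to derive from the tweet text. One possible way, as a sketch with a made-up function name and a made-up tweet:

```python
def count_features(text):
    """Return (length, number of hashtags, number of exclamation points)."""
    return len(text), text.count('#'), text.count('!')


ln, hashes, excl = count_features('ban stay!! #kfcbbanslesbianfilm #rafiki')
```

Applying this to every tweet would yield the `tweetLength`, `noOfHashtags` and `noOfExclams` columns used in the dataframe above.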

- THE MODEL

- After trying a couple of classifiers, a Random Forest Classifier in SKLearn was chosen.

* Here's the logic:

i) Define a classic RandomForest Classifier

ii) Tune the hyperparameters

iii) Fit to training set

iv) Test on the test set. Stop when accuracy is sufficient

v) Label the unlabeled set

a] Define classifier and hyperparameters

from sklearn.ensemble import RandomForestClassifier

rfc = RandomForestClassifier()

- How well a machine learning model performs depends on a lot of things, and one of them is hyperparameters.

- Hyperparameters are intrinsically defined variables of a model that can be tuned to vary the performance. For our Random Forest classifier, the following hyperparameters were selected:

i) n_estimators: Number of trees to be built in the forest

ii) criterion: Function to measure quality of split

iii) max_features: Maximum number of features considered when looking for the best split

iv) warm_start: Whether or not to reuse a previously built forest or start all over

v) class_weight: Suitable for unbalanced data i.e. an alternative to undersampling and oversampling.

These are defined as:

rfcParameterGrid = {'n_estimators': [5, 10, 25, 50],
                    'criterion': ['gini', 'entropy'],
                    'max_features': [1, 2, 3, 4, 5, 6, 7, 8],
                    'warm_start': [True, False],
                    'class_weight': ['balanced', 'balanced_subsample', {0: 1, 1: 10}, {0: 1, 1: 3}, {0: 1, 1: 5}, {0: 1, 1: 1}]}

b] Tune the hyperparameters and fit to training set

- SKLearn provides a very nifty class (GridSearchCV) for tuning hyperparameters i.e. Trying the model with the options provided, above, and choosing the model with the best performance.

from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import StratifiedKFold

folds = StratifiedKFold(n_splits=10) #For purposes of validation
rfcgrid_search = GridSearchCV(rfc, param_grid=rfcParameterGrid, cv=folds)
rfcgrid_search.fit(xTrain, yTrain) #Fit to training set with each param
rfc = rfcgrid_search.best_estimator_ #Return the best model
print(rfcgrid_search.best_params_) #Print the best hyperparams
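A quick back-of-the-envelope count shows how much work this grid search does. The sketch below simply multiplies the number of options per hyperparameter by the number of folds; the function name is made up, and the grid is copied from the one defined above.

```python
def count_fits(param_grid, n_splits):
    """Number of combinations in the grid, and fits during cross-validation."""
    combinations = 1
    for values in param_grid.values():
        combinations *= len(values)
    return combinations, combinations * n_splits


grid = {
    'n_estimators': [5, 10, 25, 50],
    'criterion': ['gini', 'entropy'],
    'max_features': [1, 2, 3, 4, 5, 6, 7, 8],
    'warm_start': [True, False],
    'class_weight': ['balanced', 'balanced_subsample', {0: 1, 1: 10},
                     {0: 1, 1: 3}, {0: 1, 1: 5}, {0: 1, 1: 1}],
}
combinations, fits = count_fits(grid, n_splits=10)
```

That is 768 combinations and 7,680 forests trained across the 10 folds (plus one final refit of the best model), which likely accounts for much of the long run time.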

c] Evaluate the model and test on the test set

- A common method to evaluate a machine learning model is cross-validation. SKLearn provides a simple implementation of this.

from sklearn.model_selection import cross_val_score

cross_val_score(rfc, xTrain, yTrain, cv=10)

- The model can now be run on our testing set. To see performance, a confusion matrix and other metrics (F1 Score, Recall and Precision) are printed out

from sklearn.metrics import classification_report, confusion_matrix

y_pred = rfc.predict(xTest)
print(confusion_matrix(yTest, y_pred))
print(classification_report(yTest, y_pred))

- How important the features are to the modelling can also be extracted

values = rfc.feature_importances_
attrs = xTrain.columns
for i in range(len(values)):
    print('Attr: {}. Importance: {}'.format(attrs[i], values[i]))

d] Label the unlabeled set

rfc.predict(xUnlabeled)

OUR FINAL MODEL TOOK 45 MINUTES TO RUN!!!

Some stats returned:

Best hyperparameters: {'max_features': 6, 'n_estimators': 25, 'criterion': 'entropy', 'warm_start': False, 'class_weight': {0: 1, 1: 3}}

Accuracy for 10 folds: [0.4893617 0.38297872 0.52173913 0.41304348 0.32608696 0.31818182 0.29545455 0.41860465 0.51162791 0.39534884]

Confusion Matrix:

         pho  neu  phi  garb   (Pred)
pho   [[   1    0    4     2]
neu    [   4    3    7     1]
phi    [   6    1    8     2]
garb   [   1    1    5    15]]

Report:

              precision    recall  f1-score   support

          0        0.08      0.14      0.11         7
          1        0.60      0.20      0.30        15
          2        0.33      0.47      0.39        17
          3        0.75      0.68      0.71        22

avg / total        0.52      0.44      0.45        61
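The precision and recall figures in the report can be recomputed by hand from the confusion matrix, which is a useful sanity check. A small sketch (the function name is made up): precision for a class is its diagonal entry divided by the column total, and recall is the diagonal entry divided by the row total.

```python
# Rows are true labels, columns are predictions (phobic, neutral, philic, garbage)
matrix = [
    [1, 0, 4, 2],
    [4, 3, 7, 1],
    [6, 1, 8, 2],
    [1, 1, 5, 15],
]


def per_class_scores(matrix):
    """Return a list of (precision, recall) pairs, one per class."""
    scores = []
    for k in range(len(matrix)):
        true_pos = matrix[k][k]
        predicted = sum(row[k] for row in matrix)  # column total
        actual = sum(matrix[k])                    # row total
        scores.append((true_pos / float(predicted), true_pos / float(actual)))
    return scores


scores = per_class_scores(matrix)
correct = sum(matrix[k][k] for k in range(len(matrix)))
accuracy = correct / float(sum(sum(row) for row in matrix))
```

For the garbage class this gives a precision of 15/20 and a recall of 15/22, matching the 0.75 and 0.68 in the report, and the overall accuracy on the test set works out to 27/61.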

Not impressive at all but it's a start!!

Eyeballing the predictions, the model's performance is quite satisfactory

THE END
