IS YOUR CROWDFUNDING CAMPAIGN GOING TO SUCCEED?

If you have lived in Kenya as long as I have, the term 'Harambee' should, at the very least, ring a bell. 'Harambee' is a Swahili term that literally means 'all pull together'. If you need help, be it money-wise, labour-wise… it doesn't matter, all you have to do 'ni kuita Harambee' (is call a Harambee) and your community's got you!! Harambee is such a revered activity in Kenya, it's our country's official motto! (I bet you didn't know that) *To be clear, I do realise pulling together is not exclusively Kenyan*

So, with Harambee's prevalence, someone must have decided to bring it into the digital world. Yes, they did. So, what do you get when you cross our spirit of Harambee with technology? You build massive platforms and call it crowdfunding! Kickstarter, Indiegogo, Crowd Supply, GoFundMe etc. There's a tonne of these crowdfunding platforms on the web. If you have an idea that you'd like to execute but do not have the capital for it, there's a simple formula to follow:

i) Sign up on any of the platforms. The HowTos depend on which platform you choose. Remember: These platforms usually come at a cost to use.

ii) Prepare an attractive 'pitch' for your idea. This usually includes a video explaining what the product is, graphics etc. Just, sell your product.

iii) State the amount of money you'd like to raise, your goal and by when.

iv) Users of said platform, if attracted to your idea, decide to back you. Basically, 'wanakuchangia' (they chip in for you)

v) Depending on the platform, you may need to provide incentives for backing e.g. the product, equity etc. More details here.

vi) On most of the platforms, it's 'all or nothing'. You either reach or surpass your goal, or you don't get any money AT ALL.

It goes without saying that it'd be quite neat if you met your goal. So, what factors determine whether or not you reach your goal? A yuuuuuge number of articles on how to have a successful crowdfunding campaign have been written, but most of them take the format of 'How to manipulate people psychologically to like your product and throw money at it' rather than taking an objective look at what has worked before and drawing insights from it.

Those who do not know history's mistakes are doomed to repeat them – George Santayana

How do you take an objective look? You collect the data about any and all crowdfunding campaigns that have happened in the past, analyse it and draw insights. For fun, I'll predict whether or not 24 new campaigns (as of June 30th 2018) will be successful. An update to this post shall follow, to check how accurate the predictions are. An accuracy of >=80% shall grant me permission to change my name to Kelvin 'The Prophet' Gakuo.

Updates (Aug 28th 2018)

I have checked back on the projects. The actual outcome is appended to the predictions table as follows

| Project Name | Link | Prediction | Outcome |
|---|---|---|---|
| Gafas de Sol Ecológicas \| Carpris Sunglasses | https://kck.st/2tOXFNn | 0 | 0 |
| Project for soyamax join to JAPAN EXPO 2019 in Paris | https://kck.st/2Kuxkyn | 0 | 1 |
| The Witch's Cupboard: Potions & Ingredients Hard Enamel Pins | https://kck.st/2Mys2Q0 | 1 | 1 |
| Ultra Entertainment : A Business | https://kck.st/2KkQiby | 0 | 0 |
| Karama Yemen Human Rights Film Festival | https://kck.st/2Ky8wlM | 0 | 0 |
| The Witches Compendium: A guide to all things Wicca | https://kck.st/2KCoVWn | 0 | 0 |
| Quickstarter: CELIE & COUCH | https://kck.st/2NdDn9l | 0 | 0 |
| Tiger Friday | https://kck.st/2KzOUBg | 0 | 1 |
| Vainas De Vainilla - Vanilla Bean | https://kck.st/2tT2dSG | 0 | 0 |
| "Simone de Beauvoir" Libro Ilustrado/Picture Book | https://kck.st/2yTg4yl | 0 | 0 |
| Artist Lost/Heiress Denied - A True Story | https://kck.st/2yWJPhG | 0 | 0 |
| Stolen Weekend's debut EP | https://kck.st/2MDbHJx | 0 | 0 |
| Maidens of the dragon, 28mm quality pewter miniatures. | https://kck.st/2NbJGtW | 0 | 1 |
| Little Tumble Romp Indoor Sensory Center | https://kck.st/2KAtaBO | 0 | 0 |
| Watercolour Style - Nebula Washi Stickers | https://kck.st/2KAZogy | 0 | 0 |
| HOWPACKED.COM New nightlife monitoring website version 1 | https://kck.st/2yWJMT2 | 0 | 0 |
| Boob Planter Pins | https://kck.st/2lNmup7 | 0 | 1 |
| Operation: Boom - Issue 3 | https://kck.st/2Mvu576 | 0 | 1 |
| Rapture VR | https://kck.st/2N793gt | 0 | 0 |
| Goos'd - Bring the party and Get Goos'd! | https://kck.st/2KyVt3P | 0 | 0 |
| Smuggles n' Snuggles | https://kck.st/2Kxb4Rb | 1 | 1 |
| Makeshift | https://kck.st/2tTddj0 | 0 | 0 |
| Creative Community Project | https://kck.st/2Ku6LcG | 0 | 0 |
| Opening a fabric store in Downtown Kent! | https://kck.st/2yVEC9P | 0 | 0 |

Of the 24 projects, 5 were predicted incorrectly. So, that gives us an accuracy of 79.17%. So close!!!!
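The accuracy figure is simply the share of rows where the prediction matches the actual outcome. A quick sketch of the arithmetic, with the (prediction, outcome) pairs copied from the table above in row order:

```python
# (prediction, outcome) pairs copied from the updates table, in row order
pairs = [
    (0, 0), (0, 1), (1, 1), (0, 0), (0, 0), (0, 0),
    (0, 0), (0, 1), (0, 0), (0, 0), (0, 0), (0, 0),
    (0, 1), (0, 0), (0, 0), (0, 0), (0, 1), (0, 1),
    (0, 0), (0, 0), (1, 1), (0, 0), (0, 0), (0, 0),
]

# A prediction is correct when it equals the actual outcome
correct = sum(1 for pred, actual in pairs if pred == actual)
accuracy = correct / len(pairs)

print('{} of {} correct: {:.2%}'.format(correct, len(pairs), accuracy))
```

Note that every miss is a campaign predicted to fail that actually succeeded, which fits the bias towards unsuccessful campaigns discussed in the modelling section.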

Join me, and let's find out how insightful this data can get…

Note: All the technical details (the code, techniques) are explained in depth here

The data

To answer our question, data was extracted from two of the most popular crowdfunding platforms: kickstarter.com and indiegogo.com. The data comprised a couple of thousand campaigns from each platform, running from June 2017 to June 2018, each described by a good number of features

Kickstarter vs Indiegogo

To understand how the crowdfunding world behaves, it's worth comparing the two most popular platforms on some metrics

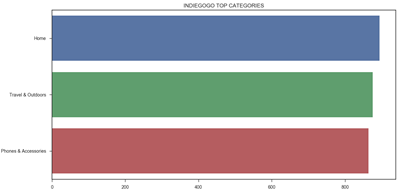

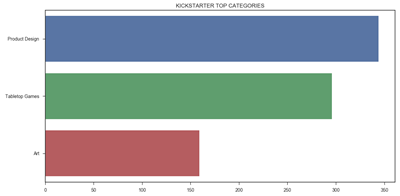

- Most populated categories (those with the largest number of campaigns)

* Indiegogo: Home, Travel & Outdoors, Phones & Accessories

* Kickstarter: Product Design, Tabletop Games, Art

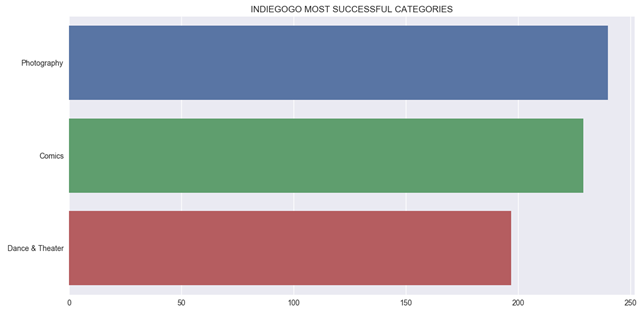

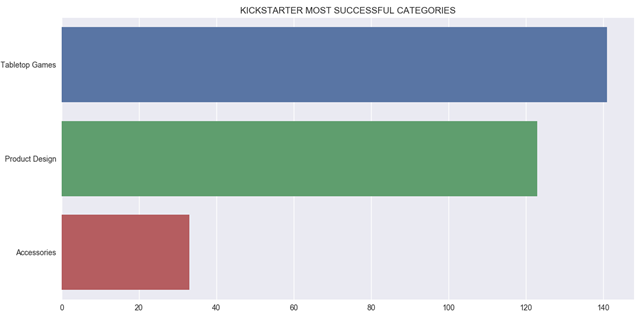

- Most successful categories


* Indiegogo: Photography, Comics, Dance & Theater

* Kickstarter: Product Design, Tabletop Games, Accessories

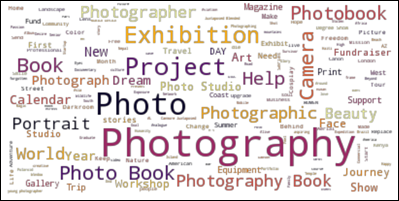

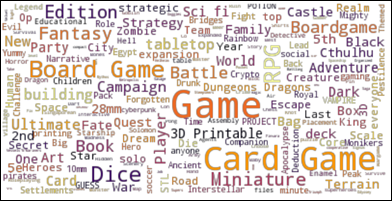

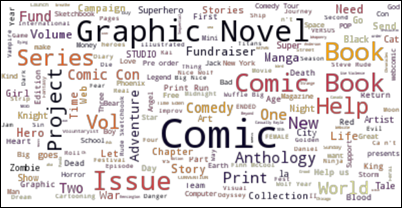

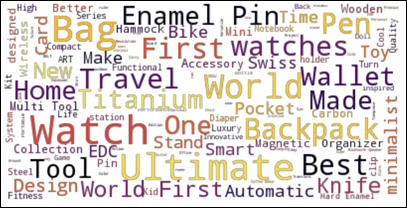

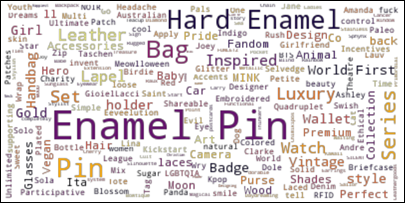

- Most popular words used in campaign titles for the top categories

Looks like every day there's a new Ultimate product seeking funding on Kickstarter. And people seem to loooove their enamel pins!

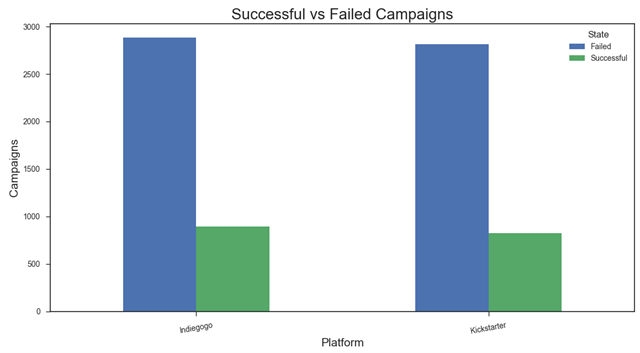

- Successful vs failed campaigns

Kickstarter users and locations

Kickstarter provides a comprehensive definition of the campaigners, i.e. users who have started a campaign, and of where each campaign is located. I decided to dig in

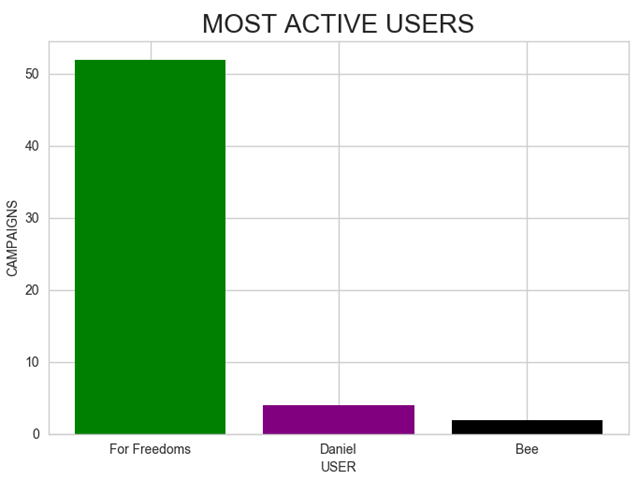

- Most active users (By number of campaigns)

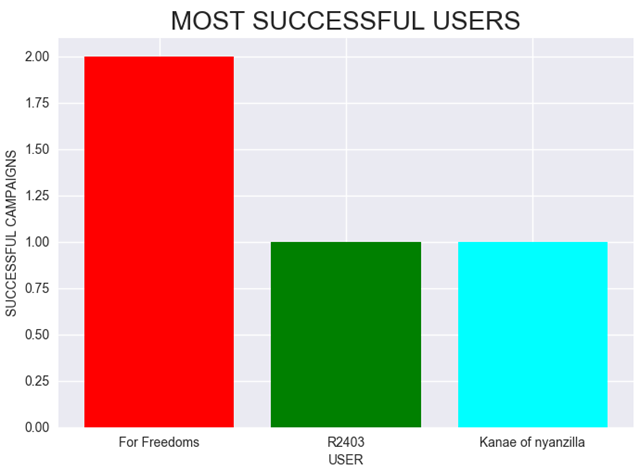

For Freedoms is seriously on the grind!

- Most successful users (By number of successful campaigns)

For Freedoms' grind seems to be paying. However, notice that only 3.846% of the attempts were successful

Location of campaign

psst… You can click on the countries

How are there no projects hosted from Kenya?!?! I thought we went digital and stuff.

The most successful project

The most successful project, with a goal of $35 and a whopping $5429 in funding, is by Joe Magic Games. Read about the campaign, here

So, what determines success?

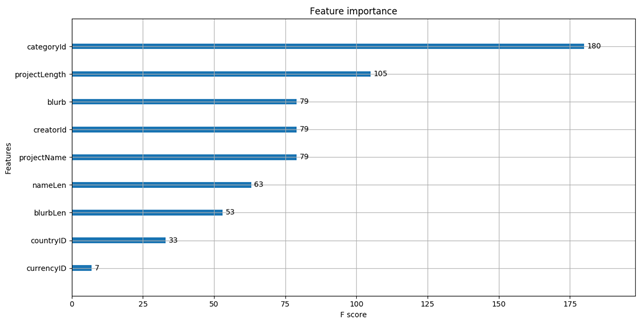

Excluding the most obvious indicators of success, such as the number of backers (people willing to fund your project) and the difference between the goal and pledged amount, the other indicators were put through the XGBoost algorithm, and their importance computed and plotted.

Clearly, the category of your campaign, how long the campaign runs, the text describing what the campaign is about, the creator and the name matter the most.

Shakespeare, there definitely is something in a name!!

Surprisingly, the country of origin has very little impact!! Take your shot, you never know.

Let's do prophecy!!!

I decided to collect 24 of the newest projects as of 30th June and predict whether they'll be successful (1) or unsuccessful (0). The results were as follows:

| Project Name | Link | Prediction |
|---|---|---|
| Gafas de Sol Ecológicas \| Carpris Sunglasses | https://kck.st/2tOXFNn | 0 |
| Project for soyamax join to JAPAN EXPO 2019 in Paris | https://kck.st/2Kuxkyn | 0 |
| The Witch's Cupboard: Potions & Ingredients Hard Enamel Pins | https://kck.st/2Mys2Q0 | 1 |
| Ultra Entertainment : A Business | https://kck.st/2KkQiby | 0 |
| Karama Yemen Human Rights Film Festival | https://kck.st/2Ky8wlM | 0 |
| The Witches Compendium: A guide to all things Wicca | https://kck.st/2KCoVWn | 0 |
| Quickstarter: CELIE & COUCH | https://kck.st/2NdDn9l | 0 |
| Tiger Friday | https://kck.st/2KzOUBg | 0 |
| Vainas De Vainilla - Vanilla Bean | https://kck.st/2tT2dSG | 0 |
| "Simone de Beauvoir" Libro Ilustrado/Picture Book | https://kck.st/2yTg4yl | 0 |
| Artist Lost/Heiress Denied - A True Story | https://kck.st/2yWJPhG | 0 |
| Stolen Weekend's debut EP | https://kck.st/2MDbHJx | 0 |
| Maidens of the dragon, 28mm quality pewter miniatures. | https://kck.st/2NbJGtW | 0 |
| Little Tumble Romp Indoor Sensory Center | https://kck.st/2KAtaBO | 0 |
| Watercolour Style - Nebula Washi Stickers | https://kck.st/2KAZogy | 0 |
| HOWPACKED.COM New nightlife monitoring website version 1 | https://kck.st/2yWJMT2 | 0 |
| Boob Planter Pins | https://kck.st/2lNmup7 | 0 |
| Operation: Boom - Issue 3 | https://kck.st/2Mvu576 | 0 |
| Rapture VR | https://kck.st/2N793gt | 0 |
| Goos'd - Bring the party and Get Goos'd! | https://kck.st/2KyVt3P | 0 |
| Smuggles n' Snuggles | https://kck.st/2Kxb4Rb | 1 |
| Makeshift | https://kck.st/2tTddj0 | 0 |
| Creative Community Project | https://kck.st/2Ku6LcG | 0 |
| Opening a fabric store in Downtown Kent! | https://kck.st/2yVEC9P | 0 |

Pretty bleak, ey? See you on September 1st 2018 as I check how accurate the predictions are

Note: The following section provides an in-depth explanation of the process, tools and techniques applied to answer today’s question. Feel free to skip to the comment section, here

THE TUTORIAL

In this section, I provide a full tutorial on how to recreate exactly what I did.

Prerequisites:

- Python >2.7

- The code repository off Github, here

- A background in web development

- How AJAX works (start here) and workings of infinite scrolling (here)

- Basics of web crawling using Scrapy (start here)

- Fundamentals of machine learning (start here)

- Machine learning in Python (start here)

1. DATA COLLECTION AND CLEAN-UP

A. SETUP

- For this project, Scrapy was the primary data collection tool. To set it up:

pip install scrapy

- To start a Scrapy project and move into it:

scrapy startproject ScraperName
cd ScraperName

B. INDIEGOGO

Refer to indiegogoWrangler.py

- Due to how indiegogo.com is built, it was not possible to crawl it efficiently (as you'll see with Kickstarter). However, raw datasets exist on the internet, and one was obtained as a CSV file from goo.gl/6pPCJb

- Our code file takes the CSV dump, extracts our desired features and dumps them to a JSON file, using Pandas

- READ CSV FILE AND EXTRACT RELEVANT COLS

import pandas as pd

dump = pd.read_csv('data/'+inputFile)
#A few of the relevant features
ids = dump['project_id'].tolist()
names = dump['title'].tolist()

- WRITE TO JSON

import json

obj = {}
obj['projects'] = []
for j in range(len(ids)):
    item = {} #Empty project object
    #A few relevant attrs
    item['projectId'] = ids[j]
    item['projectName'] = names[j]
    #Append populated project object to objects list
    obj['projects'].append(item)
#Write objects to JSON file ('w' so that a rerun overwrites the file instead of appending a second JSON document)
with open('data/'+outputFile, 'w') as dp:
    json.dump(obj, dp)

C. KICKSTARTER

Refer to KickstarterWrangler/

- There are two ways to scrape a website:

- Screen scraping: Visit the URL, and extract information from the displayed HTML

- Crawling: Figure out how data is moved around i.e. how and where data is displayed as HTML, then write a spider to mimic this behaviour

* Websites that use infinite scrolling are just begging to be crawled!!

- In a nutshell, here's how most websites implement infinite scrolling:

- Initiate an AJAX call based on a click or scroll trigger

- The AJAX call passes parameters (usually page number) to the backend

- The backend returns a JSON file containing the data based on said parameters

- The JSON file is parsed appropriately and the contents displayed as HTML

Word to the wise: Whenever you see a website utilises infinite scrolling, $100 says a scrolling event is triggering an AJAX call that returns a JSON file with the data.
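To make the four steps concrete, here is a minimal sketch of the loop a crawler runs against such a backend. No real site is contacted: `fake_backend` is a made-up stand-in for the AJAX endpoint, and the key names `projects` and `has_more` mirror the Kickstarter response used later in this post, so other sites will likely name things differently.

```python
import json

def crawl_all_pages(fetch_page, max_pages=200):
    """Request page 1, 2, 3... until the backend reports there is nothing more.

    fetch_page is any callable that takes a page number and returns JSON text.
    """
    items = []
    page = 1
    while page <= max_pages:
        data = json.loads(fetch_page(page))      # parse the JSON returned for this page
        items.extend(data.get('projects', []))   # keep the data points it carries
        if not data.get('has_more'):             # backend says this was the last page
            break
        page += 1                                # same effect as scrolling further down
    return items

# Hypothetical backend standing in for the real AJAX endpoint: 3 pages of 2 items each
def fake_backend(page):
    projects = [{'id': page * 10 + i} for i in range(2)]
    return json.dumps({'projects': projects, 'has_more': page < 3})

all_items = crawl_all_pages(fake_backend)
print(len(all_items))
```

Swapping `fake_backend` for a function that performs the actual HTTP request is all it would take to point the same loop at a live endpoint.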

- kickstarter.com utilises the same logic, which I used to my advantage as follows:

- Trigger an AJAX call passing the appropriate parameters

- I did some digging and found a couple of things:

- The AJAX call is passed using a URL in the form https://www.kickstarter.com/discover/advanced?google_chrome_workaround&woe_id=0&sort=magic&seed={a 7-digit seed}&page={an integer}

Refer to KickstarterWrangler/KickstarterWrangler/spiders/kickstarteritems.py

import json
import scrapy

class GetKickstarterItems(scrapy.Spider):
    name = 'kickstarteritems'
    #Seed is zero-padded to 7 digits and page to 5 digits, so both can be sliced off the URL later
    #(the page is written as 1 rather than 00001, which is a syntax error in Python 3)
    baseURL = "https://www.kickstarter.com/discover/advanced?google_chrome_workaround&woe_id=0&sort=magic&seed=%07d&page=%05d"%(2547000, 1)
    start_urls = [baseURL]

    def parse(self, response):
        #Scrapy returns an object 'response' containing data returned by server
        data = json.loads(response.body)

- For the AJAX call to work properly, request headers need to be set. Under KickstarterWrangler/KickstarterWrangler/settings.py, set:

DEFAULT_REQUEST_HEADERS = {

'Accept': 'application/json, text/javascript, */*; q=0.01',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'en-US,en;q=0.5',

'Connection': 'keep-alive',

'DNT': 1,

'Host': 'www.kickstarter.com',

'X-Requested-With': 'XMLHttpRequest'

}

- Parse the returned JSON file to get the relevant data points

for project in data.get('projects', []): #Parse project JSON
    item = dict()
    # A few relevant attrs
    #Project Details
    item['projectId'] = project.get('id')
    item['projectName'] = project.get('name')
    #Creator details
    item['creatorId'] = project.get('creator', {}).get('id')
    yield item #Scrapy command to return the populated object

- The logic above will only return data from the first page i.e. only the first 12 items. To overcome this and get as many pages as possible:

- Each JSON file returned has an attr 'has_more' which, if set to 'True', means there are more JSONs to retrieve. Therefore, increment the page number by one, then parse the new response

import time

currentPage = response.url #The current URL being crawled
pageNumber = int(currentPage[-5:]) #Extract current page number as last 5 chars on the URL
currentSeed = int(currentPage[-18:-11]) #Seed is the 7 chars that end 11 chars from the end of the URL
if (data['has_more']):
    nxt = pageNumber + 1
    seed = currentSeed
    getNxtJSON = "https://www.kickstarter.com/discover/advanced?google_chrome_workaround&woe_id=0&sort=newest&seed=%07d&page=%05d"%(seed, nxt)
    time.sleep(5) #Sleep for five seconds then go to the next page
    yield scrapy.Request(url=getNxtJSON, callback=self.parse)
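Slicing fixed character positions off the URL works only while the seed and page stay zero-padded and sit at the very end of the URL. A possible alternative, which is not what the repository's spider does, is to read both values from the query string using the standard library. The helper name `seed_and_page` is made up for this sketch:

```python
from urllib.parse import urlsplit, parse_qs

def seed_and_page(url):
    """Read the 'seed' and 'page' query parameters from a discover URL as ints"""
    query = parse_qs(urlsplit(url).query)   # e.g. {'seed': ['2547000'], 'page': ['00042'], ...}
    return int(query['seed'][0]), int(query['page'][0])

url = ('https://www.kickstarter.com/discover/advanced?google_chrome_workaround'
       '&woe_id=0&sort=newest&seed=2547000&page=00042')
print(seed_and_page(url))
```

This keeps working if the parameters are reordered or the padding is dropped, at the cost of a couple of extra lines.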

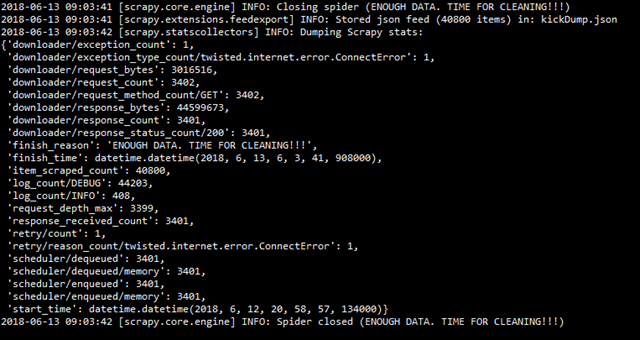

- The code above will only return 200 pages. Why? Kickstarter has set a restriction whereby a single seed value can only return 200 pages. To circumvent this:

- For a starting seed value e.g. 2547000, extract all 200 pages (2400 items)

- At page number 200, increment the seed by a random int, reset page number to 1 then crawl for the data

- When the seed value gets to a predefined value e.g. 2548000, it's time to stop!!!

- This method will produce A LOT of redundant data that will be cleaned later

import random
from scrapy.exceptions import CloseSpider

if(pageNumber==200):
    nxt = 1
    var = random.randint(1, 100)
    seed = currentSeed + var #Increment by a random value
    if(seed <= 2548000):
        getNxtJSON = "https://www.kickstarter.com/discover/advanced?google_chrome_workaround&woe_id=0&sort=newest&seed=%07d&page=%05d"%(seed, nxt)
        time.sleep(600) #Sleep for 10 minutes before using the next seed from page 1
        yield scrapy.Request(url=getNxtJSON, callback=self.parse)
    else: #Enough seeds, stop!!
        raise CloseSpider('ENOUGH DATA. TIME FOR CLEANING!!!') #Close the spider
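Because different seeds return overlapping projects, the same campaign can end up scraped more than once. A minimal sketch of the clean-up, assuming each scraped item is a dict carrying the `projectId` key set by the spider (the sample items below are made up, and the repository may well clean the data differently):

```python
def drop_duplicate_projects(items):
    """Keep the first occurrence of each projectId, preserving the scrape order"""
    seen = set()
    unique = []
    for item in items:
        if item['projectId'] not in seen:
            seen.add(item['projectId'])
            unique.append(item)
    return unique

# Made-up sample: project 1 was returned under two different seeds
scraped = [
    {'projectId': 1, 'projectName': 'Enamel pins'},
    {'projectId': 2, 'projectName': 'Board game'},
    {'projectId': 1, 'projectName': 'Enamel pins'},
]
cleaned = drop_duplicate_projects(scraped)
print(len(scraped), '->', len(cleaned))
```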

The spider's output was:

LABELLING

A campaign where the ratio of pledged to goal amounts is >= 1.0, is considered successful. This can be easily computed on a dataframe as follows:

import numpy as np
import pandas as pd

df['success'] = df['pledged'] / df['goal'] #Ratio of pledged to goal amounts

The data is then labeled:

df['label'] = np.where(df['success'] >= 1, 1, 0) #1: Successful, #0: Unsuccessful

2. PREPROCESSING

Refer to preprocessor.py

ML models only work with numerical data. We therefore need to preprocess any categorical data into usable features. For our data, the following needed to be done:

- Compute length of campaign

The length is computed as the time between the deadline and the launch, duh!

df['projectLength'] = df['deadline'] - df['launch']
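What the subtraction yields depends on how the two columns are stored. The sketch below assumes they are Unix timestamps in seconds, which is an assumption about the scraped JSON rather than something stated in this post. Under that assumption the raw difference is in seconds, and dividing by 86400 gives days:

```python
import pandas as pd

# Made-up campaigns; timestamps are assumed to be Unix seconds (UTC)
df = pd.DataFrame({
    'launch':   [1527811200, 1528416000],   # 2018-06-01, 2018-06-08
    'deadline': [1530403200, 1529625600],   # 2018-07-01, 2018-06-22
})

df['projectLength'] = df['deadline'] - df['launch']      # length in seconds
df['projectLengthDays'] = df['projectLength'] / 86400    # 86400 seconds in a day

print(df[['projectLength', 'projectLengthDays']])
```

A tree-based model does not care about the unit, but days are far easier to read when plotting or sanity-checking the data.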

- Assign countries and currencies to unique IDs

This is done using Pandas assign method and cat.codes. Read more here

df =df.assign(countryID=(df['country']).astype('category').cat.codes)

df =df.assign(currencyID=(df['currency']).astype('category').cat.codes)

#Two new columns, 'countryID' and 'currencyID' are created
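To see what `cat.codes` actually produces, here is a toy example with made-up rows. Pandas sorts the distinct values and numbers them from 0, and keeping the categories around lets you map an ID back to its country later:

```python
import pandas as pd

df = pd.DataFrame({'country': ['US', 'GB', 'US', 'KE']})

country = df['country'].astype('category')
df = df.assign(countryID=country.cat.codes)

# Lookup table from ID back to the original value
id_to_country = dict(enumerate(country.cat.categories))

print(df)
print(id_to_country)
```

Keep in mind that the IDs are arbitrary integers: a tree-based model will treat them as ordered values even though countries have no natural order.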

- Remove stop words from blurb and name

Commonly used English words that don't add a lot of meaning need to be removed.

from nltk.corpus import stopwords

stopWords = stopwords.words('english')

df['processedName'] = df['projectName'].apply(lambda x: ' '.join([word for word in x.split() if word not in (stopWords)]))

df['processedBlurb'] = df['blurb'].apply(lambda x: ' '.join([word for word in x.split() if word not in (stopWords)]))
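One caveat: NLTK's English stop word list is all lowercase, so a case-sensitive check lets capitalised words such as 'The' or 'A' slip through, and campaign titles are full of those. A possible tweak is to lowercase each word for the comparison only. The sketch uses a tiny made-up stop word set so that it runs without downloading the NLTK corpus:

```python
# Tiny stand-in for stopwords.words('english'), just for this sketch
STOP_WORDS = {'the', 'a', 'of', 'to', 'and', 'for'}

def remove_stop_words(text, stop_words=STOP_WORDS):
    """Drop stop words regardless of case, keeping the original casing of the rest"""
    return ' '.join(word for word in text.split() if word.lower() not in stop_words)

print(remove_stop_words('The Witches Compendium: A guide to all things Wicca'))
```

Using a set instead of a list also makes each membership check faster, which adds up over thousands of blurbs.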

- Engineer some new features

The blurb and name lengths were computed to have more features per sample

df['nameLen'] = df['processedName'].apply(lambda x: len(x))
df['blurbLen'] = df['processedBlurb'].apply(lambda y: len(y))

* The Pandas 'apply' method, takes a function that is run on every item in a row or column. Read more here

- Remove unwanted columns

After converting the categorical data into numerical data, you want to remove the unwanted features (columns):

df.drop(['state','projectId', 'categoryName', 'creatorName', 'success'], axis=1, inplace=True) #Add as many columns as you want to the list

3. EXPLORATORY DATA ANALYSIS

- KICKSTARTER EDA

Refer to eda.py

- USER ACTIVITY

- Create a new column with the count of each creator

tf['count'] = tf.groupby('creatorName')['creatorName'].transform('size')

- Uniquefy the dataframe by creator

tf.drop_duplicates('creatorName', keep='first', inplace=True)

- Sort the dataframe according to the count

sf = tf.sort_values('count', ascending=False)

- Plot the top three entries

lbls = sf[0:3]['creatorName'].tolist()

y = sf[0:3]['count'].tolist()

x = np.arange(len(y))

plt.bar(x, y, color=['Green', 'Purple', 'Black'])

plt.xticks(x, lbls)

plt.xlabel('USER', fontsize=10)

plt.ylabel('CAMPAIGNS', fontsize=10)

plt.title('MOST ACTIVE USERS', fontsize=20)

sns.set()

plt.show()
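The count, de-duplicate and sort steps can also be collapsed into a single `value_counts` call, which returns the counts already sorted in descending order. A sketch on made-up creators, as an alternative to the steps above rather than a description of what eda.py does:

```python
import pandas as pd

tf = pd.DataFrame({'creatorName': ['Ann', 'Bob', 'Ann', 'Cy', 'Ann', 'Bob']})

# Number of campaigns per creator, largest first; keep the top three
top = tf['creatorName'].value_counts().head(3)

lbls = top.index.tolist()   # bar labels
y = top.tolist()            # bar heights

print(lbls, y)
```

The resulting `lbls` and `y` plug straight into the same plotting code.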

- USER SUCCESS

- Compute success levels of each user

kf['success'] = kf['pledged'] / kf['goal']

- Extract only the successful rows i.e. success >=1.0

sf = kf[kf['success'] >= 1]

- Count number of times a creator appears

sf['count'] = sf.groupby('creatorName')['creatorName'].transform('size')

- Uniquefy the dataframe by creator

sf.drop_duplicates('creatorName', keep='first', inplace=True)

- Sort according to count

gf = sf.sort_values('count', ascending=False)

- Plot the top three entries

lbls = gf[0:3]['creatorName'].tolist()
y = gf[0:3]['count'].tolist()
x = np.arange(len(y))
#plot the barplot here:
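Counting wins alone hides how many attempts it took to get them. A small sketch, on made-up data, of how attempts, successes and the success rate per creator could be computed in one pass (the column name `won` is invented for this example):

```python
import pandas as pd

kf = pd.DataFrame({
    'creatorName': ['Ann', 'Ann', 'Ann', 'Ann', 'Bob', 'Bob'],
    'pledged':     [120,   10,    0,     5,     300,   50],
    'goal':        [100,   100,   100,   100,   200,   40],
})

# A campaign is won when pledged / goal >= 1.0
kf['won'] = (kf['pledged'] / kf['goal']) >= 1

# count = attempts, sum = number of wins, mean = share of attempts won
summary = kf.groupby('creatorName')['won'].agg(['count', 'sum', 'mean'])
summary.columns = ['attempts', 'successes', 'successRate']

print(summary.sort_values('successes', ascending=False))
```

This is the kind of table that lets you put a creator's number of wins next to the percentage of attempts that succeeded.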

- CAMPAIGN BY LOCATION

- Count the number of times a country appears

fd['count'] = fd.groupby('country')['country'].transform('size')

- Uniquefy the dataframe by country

fd.drop_duplicates('country', keep='first', inplace=True)

- Sort according to count

fd = fd.sort_values('count', ascending=False)

- Write to CSV file

fd.to_csv('data/countries.csv')

- Import the CSV file in a Tableau viz

- Add 'country' to rows and 'count' to the size mark

- Select the world heat map viz

- Publish to your Tableau server

- Share to extract the embed code

- INDIEGOGO VS KICKSTARTER

Refer to indieVSkick.py

The EDA in this section was done using raw Python i.e. splitting a DataFrame into lists and manually doing comparisons 'n shit. This is a very tedious, inefficient process that doesn't utilise the power of Pandas as a data analytics library.

I therefore will not go through how things were executed here, but advise that you do your own comparisons using Pandas only as I have done in 'Kickstarter EDA' above
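As a starting point for a Pandas-only comparison, here is a hedged sketch. It assumes both datasets have been stacked into one DataFrame with `platform`, `category` and `label` columns; those names and the rows are made up for illustration and are not taken from indieVSkick.py.

```python
import pandas as pd

campaigns = pd.DataFrame({
    'platform': ['kickstarter', 'kickstarter', 'kickstarter', 'indiegogo', 'indiegogo'],
    'category': ['Art', 'Art', 'Tabletop Games', 'Comics', 'Home'],
    'label':    [1, 0, 1, 1, 0],   # 1: Successful, 0: Unsuccessful
})

# Most populated categories: number of campaigns per platform and category
counts = campaigns.groupby(['platform', 'category']).size()

# Share of successful campaigns per platform
success_rate = campaigns.groupby('platform')['label'].mean()

print(counts)
print(success_rate)
```

Each metric becomes a one-liner, with no splitting of the DataFrame into lists.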

4. MODELLING

Refer to model.py

Couple of things first:

i) Since I needed to compute the importance of features in determining success, some form of Decision Trees needed to be used. I chose to use Extreme Gradient Boosted Trees (XGBoost)

ii) The data is very biased towards unsuccessful campaigns. Warning: May overfit
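One commonly used knob for this kind of imbalance is XGBoost's `scale_pos_weight` parameter, usually set to the number of negative samples divided by the number of positive ones. The model in this post does not use it, so treat the sketch below purely as an illustration on made-up labels. It also computes the accuracy of always guessing 'unsuccessful', which is a useful baseline for judging any model trained on skewed data.

```python
# Made-up labels: 6 unsuccessful (0) campaigns for every 2 successful (1) ones
labels = [0, 0, 0, 0, 0, 0, 1, 1]

negatives = labels.count(0)
positives = labels.count(1)

# Ratio that could be passed as XGBClassifier(scale_pos_weight=scale_pos_weight)
scale_pos_weight = negatives / positives

# Accuracy of a "model" that always predicts the majority class
baseline_accuracy = max(negatives, positives) / len(labels)

print(scale_pos_weight, baseline_accuracy)
```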

- TRAINING

- TEXT TO VECTORS (TF-IDF)

First things first. We need to convert the blurb and project names into usable numeric data. A TfIdf Vectoriser is perfect for the job!

from sklearn.feature_extraction.text import TfidfVectorizer

vec = TfidfVectorizer(ngram_range=(1,1), analyzer='word', max_df=1.0, binary=False, sublinear_tf=False)
df['nameToVec'] = list(vec.fit_transform(df['processedName']).toarray())
df['blurbToVec'] = list(vec.fit_transform(df['processedBlurb']).toarray())

XGBoost only works with scalar features (ints, floats etc.), but the vectoriser produces a whole vector for each name and blurb. I therefore decided to use the sum of each vector as the feature instead.

df['projectName'] = df['nameToVec'].apply(lambda x: x.sum())
df['blurb'] = df['blurbToVec'].apply(lambda y: y.sum())
df.drop(['processedName', 'processedBlurb', 'nameToVec', 'blurbToVec'], axis=1, inplace=True)
#'projectName' and 'blurb' will now contain the sum of the vector form of each name and blurb

- THE MODEL

The features were chosen to be all other columns except for 'goal', 'pledged', and the number of backers, since these are veeeery obvious indicators of success. Obviously the label is not a feature

df.drop(['pledged', 'goal', 'backers'], axis=1, inplace=True) #Exclude the obvious markers of success
x = df[df.columns[df.columns != 'label']]

The target was the 'label' feature

y = df['label']

Split the data

from sklearn.model_selection import train_test_split

XTrain, XTest, yTrain, yTest = train_test_split(x, y, test_size=0.33, random_state=42)

Initialise and fit the classifier

from xgboost import XGBClassifier

sgd = XGBClassifier()
sgd.fit(XTrain, yTrain)

Note that XGBoost doesn't come prepackaged with SKLearn hence you'll need to:

pip install xgboost

- METRICS

Compute the cross-validation accuracy of the model:

from sklearn.model_selection import cross_val_score

from sklearn.metrics import classification_report, confusion_matrix

print('Accuracy for 10 folds: {}'.format(cross_val_score(sgd, XTrain, yTrain, cv=10)))

Output:

Accuracy for 10 folds: [ 0.7755102 0.77868852 0.77459016 0.76229508 0.7704918 0.76131687 0.781893 0.7654321 0.77366255 0.77777778]
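Ten separate numbers are hard to compare at a glance, so it helps to boil them down to a mean and a spread. The fold accuracies below are copied from the output above:

```python
from statistics import mean, stdev

# Fold accuracies copied from the cross-validation output
fold_accuracies = [
    0.7755102, 0.77868852, 0.77459016, 0.76229508, 0.7704918,
    0.76131687, 0.781893, 0.7654321, 0.77366255, 0.77777778,
]

mean_accuracy = mean(fold_accuracies)
spread = stdev(fold_accuracies)   # sample standard deviation across folds

print('Mean accuracy: {:.4f} (+/- {:.4f})'.format(mean_accuracy, spread))
```

A small spread across folds suggests the score is stable rather than a lucky split.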

Plot feature importance

import matplotlib.pyplot as plt
from xgboost import plot_importance

plot_importance(sgd)
plt.show()

- TESTING

On the test set, predict

y_pred = sgd.predict(XTest)

Compute the confusion matrix

print('Confusion Matrix:\n {}'.format(confusion_matrix(yTest, y_pred)))

Output:

Confusion Matrix:

[[909 15]

[256 21]]

Compute the classification Report

print('Report:\n {}'.format(classification_report(yTest, y_pred)))

Output:

Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Unsuccessful | 0.78 | 0.98 | 0.87 | 924 |
| Successful | 0.58 | 0.08 | 0.13 | 277 |
| avg / total | 0.73 | 0.77 | 0.70 | 1201 |
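The figures in the report follow directly from the confusion matrix. The sketch below redoes the arithmetic for the 'Successful' class and compares overall accuracy with the baseline of always predicting 'unsuccessful'. The four counts are copied from the confusion matrix above, where rows are actual classes and columns are predicted classes (scikit-learn's layout).

```python
# Counts copied from the confusion matrix: [[909, 15], [256, 21]]
tn, fp = 909, 15    # actually unsuccessful: predicted unsuccessful / successful
fn, tp = 256, 21    # actually successful:   predicted unsuccessful / successful

total = tn + fp + fn + tp

accuracy = (tn + tp) / total
precision_successful = tp / (tp + fp)   # of campaigns predicted successful, how many were
recall_successful = tp / (tp + fn)      # of campaigns that succeeded, how many were caught
baseline_accuracy = (tn + fp) / total   # always predicting "unsuccessful"

print('accuracy:  {:.3f}'.format(accuracy))
print('precision: {:.3f}'.format(precision_successful))
print('recall:    {:.3f}'.format(recall_successful))
print('baseline:  {:.3f}'.format(baseline_accuracy))
```

The model only narrowly beats the always-unsuccessful baseline and catches few of the campaigns that actually succeed, which is the class imbalance showing up in the results.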

- PREDICTING

On a bunch of new unlabeled data, label and write to file

#Label unseen data

preds = sgd.predict(dfUnlabeled)

dfUnlabeled['label'] = preds

dfUnlabeled.to_json('data/'+outFile)

THE END
